The Challenge

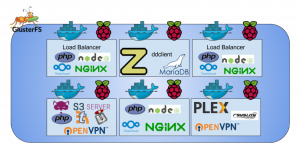

I wanted to try out Docker in production to really understand it. I believe that to fully understand or master something, you must make it part of your life. Containers are the next buzz word in IT and future employment opportunities are likely to prefer candidates with container experience. My current home environment consists of a single server running: OpenVPN, Email, Plex Media Server, Nginx, Torrent client, IRC bouncer, and a Samba server. The challenge is to move each of these to Docker for easier deployment.

The Journey

I noticed that many Docker containers contain multiple files that are added to the container upon creation. I decided to take a simpler approach and have the Dockerfile modify most of the configuration files with the sed utility and also create the startup script. I think that for most cases, having a single file to keep track of and build your system is much easier than editing multiple files. In short, the Dockerfile file should be the focal point of the container creation process for simpler administration.

Sometimes it is easier to upload prebuilt configuration files and additional support files for an application. For example, the Nginx configuration file is simpler to edit manually and have Docker put it in the correct location upon creation. Finally, there is no way around importing existing SSL certificates for Nginx in the Dockerfile.

Creating an Email server was the most difficult container to create because I had to modify configuration files for many components such as SASL, Postfix, Dovecot, creating the mail users, and setting up the alias for the system. Also, Docker needed to be cleaned often because it consumes large amounts of disk space for each container made. Dockerfiles with many commands took very long to execute. Testing out a few changes in a Dockerfile and rebuilding the container took a long time. Amplify that by the many containers I made and the many typos and you can see how my weekend disappeared.

After my home production environment moved to Docker and is working well, I created a BitBucket account to store all of my Docker files and MySQL backups to. There is a cron job inside the container that does a database dump to a shared external folder. If my system ever died, I can setup the new system easier by cloning the Git repository and building the container with a single command.

Conclusion

In conclusion, Docker was hard to deploy initially, but will save time in the future if disasters happen such as a system failure. Dockerfiles can basically be used as a well-documented blue print of a perfect application or environment. For continuity purposes in organizations, Dockerfiles can be shared and administrators should easily be able to understand what needs to be done. Even if you do not like Docker, you can basically copy and paste the contents of a Dockerfile with little text removal and build the perfect system.